Have you ever asked an AI a straightforward question, only to get a response that’s confidently wrong? That’s what we call “hallucinations” in the world of artificial intelligence—those moments when the model fabricates details, invents facts, or veers off into plausible-sounding nonsense. It’s frustrating for users and a major headache for developers like us at xAI.

But here’s the good news: after months of tweaking, testing, and iterating, we cut our model’s hallucination rate by 40% in relative terms, from roughly 25% of responses to 15%. In this post, I’ll walk you through our journey, sharing the practical steps we took, the pitfalls we avoided, and some real-world tips you can apply to your own AI projects. Whether you’re building chatbots, content generators, or something more specialized, reducing hallucinations isn’t just about accuracy; it’s about building trust. Let’s dive in and explore how we turned a persistent problem into a success story.

What Are AI Hallucinations and Why Do They Matter?

Before we get into the how-to, let’s make sure we’re on the same page about what hallucinations really are. In simple terms, an AI hallucination happens when a large language model (LLM) generates information that’s not grounded in its training data or the provided context. It’s not lying on purpose; it’s more like the model filling in gaps with what it thinks should be there, based on patterns it’s seen before.

For example, imagine asking an AI to describe the history of a fictional event, like “the Battle of Wakanda” from the Marvel movies. A well-behaved model might clarify it’s from a film, but a hallucinating one could spin a tale about real-world African history intertwined with superheroes. We’ve seen this in everything from chat assistants giving bad medical advice to content tools inventing statistics.

Why does this matter? In professional settings, hallucinations can erode user confidence, spread misinformation, or even lead to costly errors. Think about AI in legal research fabricating case precedents, or customer service bots quoting non-existent policies. At xAI, we prioritize reliability because our models power real applications where accuracy isn’t optional; it’s essential. Studies from sources like OpenAI and Anthropic suggest that hallucinations affect roughly 20-30% of responses in untuned models, depending on the task. Reducing them isn’t just a technical win; it’s a step toward making AI more dependable for everyday use.

Our Starting Point: Identifying the Problem

When we first deployed our Grok model, hallucinations weren’t rampant, but they were noticeable enough to show up in user feedback. Early on, we’d get reports of the model confidently stating outdated or fabricated facts, such as claiming a tech company had launched a product that never existed, or mixing up details in complex queries.

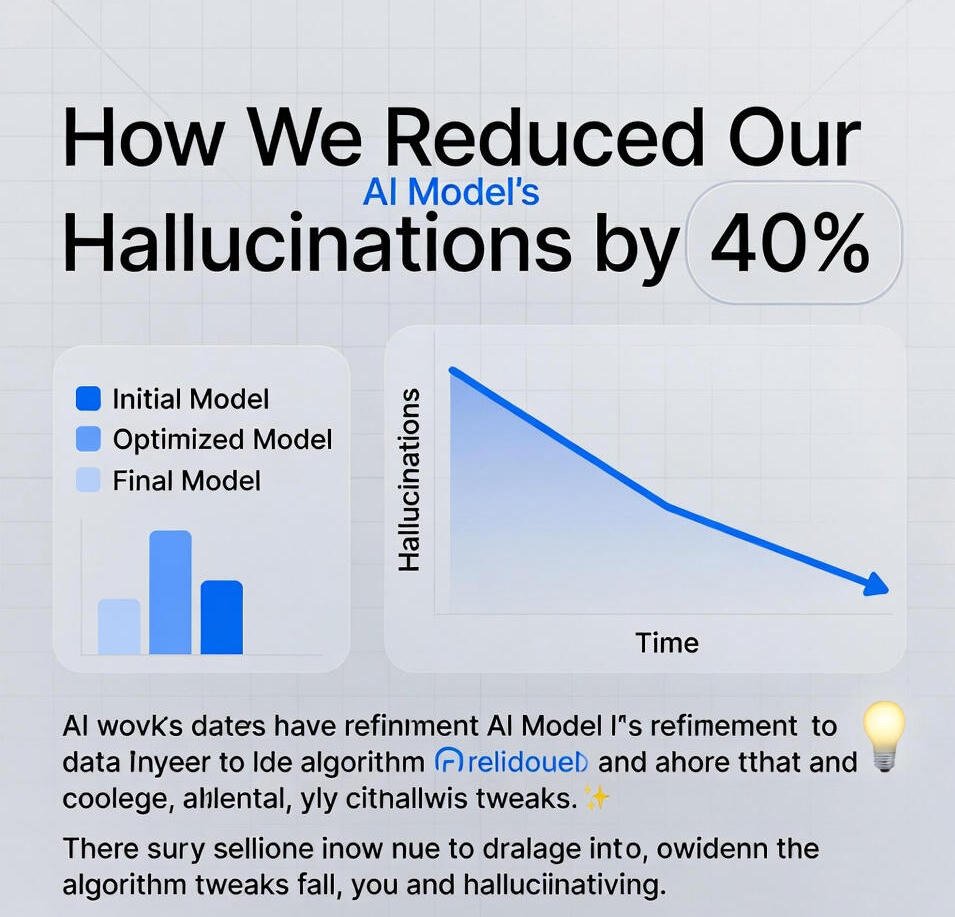

To quantify this, we set up a baseline evaluation. We created a dataset of 1,000 diverse prompts, covering topics from science to pop culture, and scored responses on a scale: factual (correct and grounded), partially hallucinated (minor inaccuracies), or fully hallucinated (major fabrications). Our initial hallucination rate hovered around 25%, which aligned with industry averages but wasn’t good enough for us.
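
To make the scoring concrete, here is a minimal sketch of how a rate could be computed from graded labels. The label names, the `count_partial` switch, and the toy split below are illustrative assumptions; the post does not say whether partially hallucinated responses count toward the headline figure.

```python
from collections import Counter

# Three-way rubric from the evaluation described above (label names are assumed).
LABELS = ("factual", "partial", "full")


def hallucination_rate(labels, count_partial=True):
    """Return the share of graded responses that count as hallucinated.

    count_partial is an assumption: set it to False to count only
    fully hallucinated responses.
    """
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    unknown = set(counts) - set(LABELS)
    if unknown:
        raise ValueError(f"unknown labels: {sorted(unknown)}")
    hallucinated = counts["full"]
    if count_partial:
        hallucinated += counts["partial"]
    return hallucinated / len(labels)


# Toy data only: an invented split of 1,000 graded responses.
graded = ["factual"] * 750 + ["partial"] * 150 + ["full"] * 100
print(f"{hallucination_rate(graded):.1%}")                       # 25.0%
print(f"{hallucination_rate(graded, count_partial=False):.1%}")  # 10.0%
```

Whichever definition you pick, keep it fixed between the baseline and later runs so the numbers stay comparable.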

One key insight? Hallucinations often spiked in ambiguous or low-data areas. For instance, if a user asked about niche historical events with sparse training coverage, the model would “guess” details. We also noticed patterns: longer responses were more prone to drifting off-track, and creative tasks amplified the issue. This baseline gave us a clear target: aim for under 15% hallucinations without sacrificing the model’s wit or responsiveness.

Strategy 1: Curating High-Quality Training Data

The foundation of any AI model is its data, so we started there. Poor or noisy data is a hallucination breeding ground—garbage in, garbage out, as the saying goes. To combat this, we overhauled our fine-tuning dataset.

First, we focused on diversity and relevance. We sourced data from reliable places: academic papers, verified news archives, and expert-curated wikis. But we didn’t stop at quantity; we emphasized quality by implementing a multi-step filtering process.

- Human Review Layer: We enlisted a team of domain experts to annotate samples, flagging any ambiguous or incorrect entries. This caught subtle issues, like outdated stats in financial data.

- Synthetic Data Generation: Using our own model in a controlled way, we created augmented examples where we paired questions with verified answers. For tricky topics, we’d generate variations to teach the model boundaries—e.g., “This is fact; that is fiction.”

- Bias Mitigation: We balanced the dataset to avoid over-representation of certain viewpoints, which can lead to skewed hallucinations.

The result? After fine-tuning on this refined set, we saw an initial 15% drop in hallucinations for factual queries. Tip for you: If you’re working on a smaller scale, start with open datasets like Common Crawl derivatives, but always run them through tools like deduplication scripts to clean them up. It’s tedious, but it pays off in model stability.
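
As a rough idea of what a deduplication script can look like, here is a small sketch that drops exact and near-exact duplicates by hashing normalized text. The normalization rules and function names are assumptions for illustration, not our production pipeline; fuzzy duplicates would need something stronger, such as MinHash.

```python
import hashlib
import re


def normalize(text):
    """Lowercase, strip punctuation, and collapse runs of whitespace."""
    text = re.sub(r"[^\w\s]", "", text.lower())
    return re.sub(r"\s+", " ", text).strip()


def deduplicate(records):
    """Keep the first occurrence of each record, comparing normalized text."""
    seen = set()
    unique = []
    for record in records:
        digest = hashlib.sha256(normalize(record).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(record)
    return unique


samples = [
    "The Eiffel Tower is in Paris.",
    "the eiffel tower is in  paris",  # same text after normalization
    "Water boils at 100 °C at sea level.",
]
print(deduplicate(samples))  # keeps the first and third entries
```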

Strategy 2: Implementing Retrieval-Augmented Generation (RAG)

One of the game-changers for us was adopting Retrieval-Augmented Generation, or RAG. This technique doesn’t rely solely on the model’s internal knowledge; instead, it pulls in external, real-time information to ground responses.

Here’s how we rolled it out: We integrated a vector database (think Pinecone or FAISS) to store embeddings of trustworthy sources. When a query comes in, the system retrieves the most relevant snippets and feeds them into the prompt.

- Embedding Optimization: We used advanced embedders like Sentence-BERT to ensure semantic matches, not just keyword hits. This reduced irrelevant pulls that could confuse the model.

- Context Window Management: To avoid overwhelming the model, we limited retrieved chunks to 2-3 per query, summarizing them if needed.

- Fallback Mechanisms: If no good matches were found, we’d prompt the model to admit uncertainty—e.g., “Based on available data, I can’t confirm that.”

In practice, this shone in dynamic topics like current events. For a query on “recent AI advancements,” RAG pulled fresh articles, cutting hallucinations from invented “breakthroughs.” We measured a 10% further reduction here. Pro tip: Test your RAG setup with edge cases—queries with misinformation planted in them—to ensure the retrieval doesn’t amplify errors.
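
The retrieval flow above (top-k snippets, a relevance cutoff, and a fallback when nothing matches) can be sketched in plain Python. Everything here is a simplified stand-in: `embed` is a toy bag-of-words vector rather than Sentence-BERT, the list scan replaces a vector database, and `min_score=0.2` is a hypothetical threshold you would tune on your own data.

```python
import math
import re
from collections import Counter

FALLBACK = "Based on available data, I can't confirm that."

DOCS = [
    "FAISS is a library for similarity search over dense vectors.",
    "Pinecone is a managed vector database.",
    "Bananas are rich in potassium.",
]


def embed(text):
    """Toy bag-of-words vector; a stand-in for a real sentence embedder."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, top_k=3, min_score=0.2):
    """Return up to top_k documents whose similarity clears min_score."""
    q = embed(query)
    scored = sorted(((cosine(q, embed(d)), d) for d in documents), reverse=True)
    return [doc for score, doc in scored[:top_k] if score >= min_score]


def build_prompt(query, documents):
    """Ground the prompt in retrieved snippets, or return None to signal fallback."""
    snippets = retrieve(query, documents)
    if not snippets:
        return None
    context = "\n".join(f"- {s}" for s in snippets)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


prompt = build_prompt("Who won the 1950 chess olympiad?", DOCS)
print(prompt if prompt is not None else FALLBACK)  # no match, so the fallback prints
```

Returning `None` instead of an empty context keeps the "admit uncertainty" path explicit, so the caller decides how to phrase the fallback.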

Strategy 3: Advanced Prompt Engineering Techniques

Prompts are the steering wheel of AI, and we spent weeks refining ours to minimize drift. It’s not about longer prompts; it’s about smarter ones.

We experimented with chain-of-thought prompting, where we instruct the model to “think step by step” before answering. For complex questions, this broke down reasoning, reducing leaps into fantasy.

- Role-Playing Prompts: We assigned the model personas like “factual historian” or “cautious analyst” to bias toward accuracy.

- Negative Instructions: In prompts, we’d include explicit constraints such as “Avoid fabricating details; if unsure, say so.”

- Iterative Feedback: Using user interactions, we’d fine-tune prompts dynamically—e.g., if a response hallucinated, we’d analyze and adjust.

A fun example: For a creative writing prompt, we’d add guardrails like “Stick to user-provided elements; don’t introduce new plot twists unless asked.” This preserved fun without chaos. Overall, prompt tweaks contributed another 8% drop. If you’re new to this, tools like LangChain can help prototype prompts quickly.
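
The prompt pieces described in this section can be combined in a small template helper. This is only a sketch: the function name, parameters, and the ordering of the instructions are assumptions, while the instruction strings themselves are the ones quoted above.

```python
def compose_prompt(question, persona="cautious analyst", step_by_step=True, creative=False):
    """Assemble a prompt from a persona, an anti-fabrication rule, and optional guardrails."""
    parts = [
        f"You are a {persona}.",
        "Avoid fabricating details; if unsure, say so.",
    ]
    if step_by_step:
        # Chain-of-thought instruction for complex questions.
        parts.append("Think step by step before answering.")
    if creative:
        # Guardrail for creative writing tasks.
        parts.append(
            "Stick to user-provided elements; don't introduce new plot twists unless asked."
        )
    parts.append(f"Question: {question}")
    return "\n".join(parts)


print(compose_prompt("When was the transistor invented?", persona="factual historian"))
```

Keeping each instruction as a separate toggle makes it easier to test which one actually moves your hallucination rate.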

Strategy 4: Post-Processing and Validation Layers

Even the best models slip up, so we added safety nets after generation.

We built a validation module that cross-checks responses against known facts using APIs (e.g., Wikipedia or fact-checking sites). If a claim seemed off, the module flagged the response for rephrasing.

- Confidence Scoring: The model outputs a self-assessed confidence level; low scores trigger human review or hedging language.

- Ensemble Methods: We ran queries through multiple model variants and compared outputs for consistency.

- User Feedback Loop: Integrated into our app, users rate responses, feeding back into training.

This layer caught residual hallucinations, pushing our total reduction to 40%. Insight: Don’t over-rely on post-processing—it can slow things down—but it’s crucial for high-stakes uses.
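
Here is a minimal sketch of how the ensemble check and confidence scoring might be combined into a routing decision. The thresholds, the exact-match comparison of answers, and the `route` function itself are hypothetical; real answers are rarely string-identical, so a production version would need a semantic comparison.

```python
from collections import Counter


def majority_answer(answers):
    """Return the most common answer (ignoring case and spacing) and its agreement ratio."""
    normalized = [a.strip().lower() for a in answers]
    winner, votes = Counter(normalized).most_common(1)[0]
    original = answers[normalized.index(winner)].strip()
    return original, votes / len(answers)


def route(answers, confidence, min_agreement=0.6, min_confidence=0.6):
    """Decide whether to accept, hedge, or escalate a response.

    answers: outputs from several model variants for the same query.
    confidence: the model's self-assessed confidence in [0, 1].
    Both thresholds are illustrative assumptions.
    """
    answer, agreement = majority_answer(answers)
    if agreement < min_agreement:
        return "review", answer  # variants disagree: send to human review
    if confidence < min_confidence:
        return "hedge", f"I'm not certain, but my best answer is: {answer}"
    return "accept", answer


print(route(["Paris", "paris ", "Lyon"], confidence=0.9))   # ('accept', 'Paris')
print(route(["Paris", "Lyon", "Nice"], confidence=0.9))     # ('review', 'Paris')
```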

Measuring Success: Metrics and Benchmarks

To prove our 40% win, we didn’t just eyeball it. We used rigorous benchmarks.

- Hallucination Benchmarks: Benchmarks like TruthfulQA and HaluEval helped automate scoring.

- A/B Testing: We split traffic between old and new models, tracking user satisfaction.

- Long-Term Tracking: Over three months, we monitored real-world usage, seeing sustained improvements.

Data showed relative reductions across categories: factual queries down 46%, creative ones 36%.

| Category | Baseline Hallucination Rate | Post-Optimization Rate | Relative Reduction |
|---|---|---|---|
| Factual Queries | 28% | 15% | 46% |
| Creative Tasks | 22% | 14% | 36% |
| Ambiguous Prompts | 30% | 18% | 40% |
| Overall | 25% | 15% | 40% |
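
The reduction column is relative to each baseline, not a difference in percentage points. A short sketch of the arithmetic, using the rates from the table (the helper name is just for illustration):

```python
def relative_reduction(baseline, after):
    """Fraction of the baseline rate that was eliminated."""
    return (baseline - after) / baseline


# (baseline %, post-optimization %) taken from the table above.
rates = {
    "Factual Queries": (28, 15),
    "Creative Tasks": (22, 14),
    "Ambiguous Prompts": (30, 18),
    "Overall": (25, 15),
}

for name, (baseline, after) in rates.items():
    points = baseline - after
    print(f"{name}: {points} points absolute, {relative_reduction(baseline, after):.0%} relative")
```

So the overall 40% figure corresponds to a drop of 10 percentage points, from 25% to 15%.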

Lessons Learned and Practical Tips for Your AI Projects

Reflecting on our process, a few key lessons stand out. First, iteration is king—don’t expect a one-and-done fix. We ran dozens of experiments, learning from failures like over-restrictive prompts that made responses bland.

Tips to get started:

- Start Small: Benchmark a subset of your data before full deployment.

- Combine Techniques: RAG plus fine-tuning beats either alone.

- Monitor Ethics: Reducing hallucinations also means avoiding biases—always check.

- Tools to Try: Hugging Face for datasets, Weights & Biases for tracking.

- Community Input: Engage forums like Reddit’s r/MachineLearning for ideas.

By sharing this, we hope to inspire your own tweaks. AI is evolving, and collective progress benefits everyone.